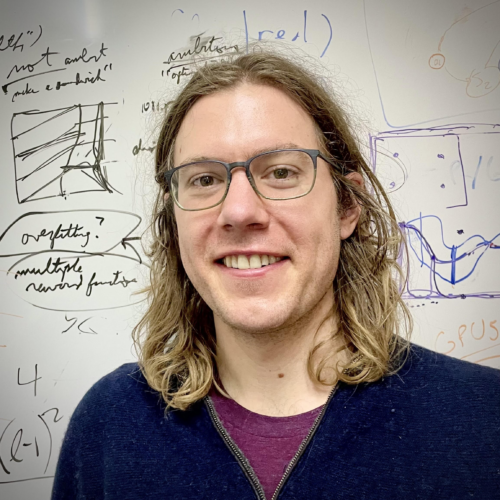

Assistant Professor in Machine Learning

Mila, University of Montreal 2024-

University of Cambridge 2021-2024

David Krueger is an Assistant Professor in Robust, Reasoning, and Responsible AI at the University of Montreal and a Core Academic Member at Mila, the Quebec Artificial Intelligence Institute. He is the holder of a CIFAR AI Chair and the IVADO Professorship in Responsible AI.

David trained in Deep Learning under Yoshua Bengio, Roland Memisevic, and Aaron Courville from 2013 to 2021. He was an intern on Google DeepMind’s AI Safety team in 2018. In 2023, he was a research director on the founding team of the UK AI Security Institute, and initiated the CAIS Statement on AI Risk.

In 2025, David founded Evitable, a nonprofit. Evitable's mission is to inform and organize the public to confront societal-scale risks of AI, and put an end to the reckless race to develop superintelligence.

David’s work focuses on reducing societal-scale risks from AI, such as the risks of human extinction and gradual disempowerment. He has published dozens of academic papers about AI and has co-authored work with Yoshua Bengio, Geoffrey Hinton, and Jan Leike, among others.

His research spans many areas of AI, and includes seminal work on algorithmic manipulation, misgeneralization, robustness, AI governance, and learning from human preferences. An underlying theme of David's research is understanding how and why AI systems fail, and tracing that back to properties of the data and training process. Some notable publications include:

David is an outspoken advocate for regulation, social action, and international cooperation to prevent the development, deployment, and use of dangerous AI systems.

David has been featured in prominent media outlets, including ITV's Good Morning Britain, Nature, Al Jazeera's Inside Story, New Scientist, and the Associated Press. David has also discussed his work and the risks from AI on podcasts, including Nonzero, The Inside View, Towards Data Science, and Le Pod.

For media inquiries and interview requests, please contact: press@davidscottkrueger.com